
Nvidia Stock Price Set to Soar: From GPT-4 to O3, Who Will Seize the Tech Dividend?

OpenAI’s recent release of the O3 model is sparking a wave of demand for GPUs, especially for Nvidia’s inference chips. Nvidia is positioned to meet this demand on two fronts: pre-training compute and test-time compute. With its technological lead and tight control of its supply chain, Nvidia appears to be riding the crest of this wave.

Although Nvidia currently dominates the market, competition is intensifying as more large enterprises ramp up their hardware investments.

O3 Model Breakthrough Drives Nvidia’s Hardware Demand

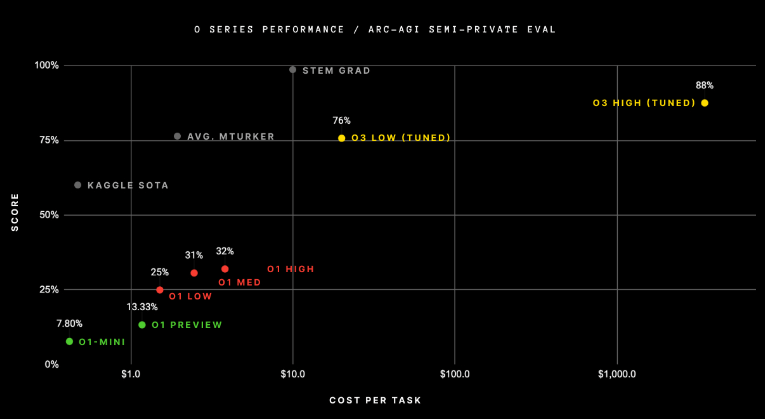

The recent release of OpenAI’s O3 model marks a major leap in artificial intelligence. The model scored 75.7% on the ARC-AGI-Pub benchmark, a substantial advance over its predecessors. It is not a small step beyond GPT-4 but a genuine shift in what we can expect intelligent machines to do.

For years, advanced large language models (LLMs) struggled with genuinely novel tasks. GPT-3 scored 0% on the ARC-AGI test, and GPT-4-class models improved only marginally. O3 breaks through these old limits, showing that powerful, flexible reasoning at inference time is now achievable. Notably, the test-time compute approach behind the O series is still in its early stages, so successors to O3 may be even more capable. This breakthrough also brings a new challenge: an enormous demand for inference compute.

O3’s gains did not come simply from scaling up the pre-trained model; they came from spending far more computation at inference time. Each query is not a simple answer lookup but a deep search through a vast “program space” that can involve tens of millions of tokens and massive GPU resources. This new mode of computation is expected to drive a surge in demand for inference chips, requiring billions of dollars in accelerator investment.
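
To make the scale of “tens of millions of tokens” per query more concrete, here is a minimal back-of-envelope sketch. It assumes the common rule of thumb that a dense transformer needs roughly 2 FLOPs per parameter per processed token, and it uses hypothetical placeholders for model size, accelerator peak throughput, and utilization, since O3’s actual figures have not been disclosed.

```python
# Rule of thumb (an approximation): a dense transformer's forward pass costs
# roughly 2 FLOPs per parameter per processed token, ignoring attention overhead.
FLOPS_PER_PARAM_PER_TOKEN = 2


def query_flops(n_params: float, n_tokens: float) -> float:
    """Approximate FLOPs needed to process n_tokens with an n_params-parameter model."""
    return FLOPS_PER_PARAM_PER_TOKEN * n_params * n_tokens


def gpu_seconds(flops: float, peak_flops_per_s: float, utilization: float) -> float:
    """Convert a FLOP count into GPU-seconds at a given peak rate and utilization."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return flops / (peak_flops_per_s * utilization)


if __name__ == "__main__":
    # Hypothetical inputs only; these are NOT O3's actual (undisclosed) figures.
    params = 200e9   # assumed model size: 200B parameters
    tokens = 10e6    # low end of "tens of millions of tokens" per hard query
    peak = 1e15      # assumed accelerator peak: 1 PFLOP/s
    util = 0.3       # assumed sustained utilization

    flops = query_flops(params, tokens)
    hours = gpu_seconds(flops, peak, util) / 3600
    print(f"{flops:.2e} FLOPs, about {hours:.1f} GPU-hours per query")
```

With these placeholder inputs the script prints `4.00e+18 FLOPs, about 3.7 GPU-hours per query`. Because the estimate is linear in tokens, a query that searches ten times as many tokens costs roughly ten times as much compute.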

More importantly, test-time compute has so far shown no clear ceiling on how far it can scale, which suggests demand could keep growing exponentially.

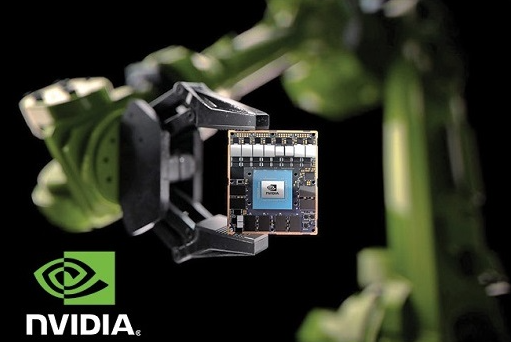

At this critical moment of technological transformation, Nvidia stands out due to its leading AI infrastructure capabilities. Over the years, Nvidia’s GPUs have been the industry standard for training large language models. Now, with O3 leading the way in AI development, inference computation demand has become the driving force of the industry.

Nvidia’s global supply chain management gives it a unique advantage in meeting this demand. It can supply the full hardware stack that compute-hungry models like O3 require: GPUs by the thousands, DPUs, dedicated memory, and networking equipment.

O3: A Qualitative Leap in AI Inference

Large language models (LLMs) have been widely recognized for their powerful pattern recognition capabilities but have often fallen short when tackling entirely new tasks. Take GPT-4 and other similar models (like Claude or Gemini) as examples: they perform excellently on familiar tasks but struggle when faced with challenging, innovative problems.

In the past, it was believed that “larger models naturally mean smarter ones,” but this view is now being questioned.

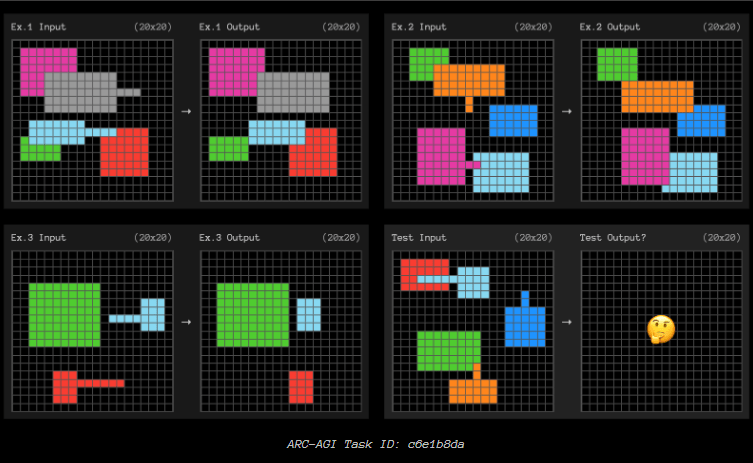

OpenAI’s O series models changed this. By combining intensive test-time computation, chain-of-thought reasoning, and adaptive solution strategies, O3 broke through this bottleneck and delivered results beyond expectations.

Unlike earlier models, which generated each answer in a single fast pass, O3 orchestrates a complex, multi-step reasoning process for every query, which lets it resolve each one more effectively. This will increase demand for inference clusters that support “large-scale real-time search,” driving further hardware advances and a heavier reliance on computational resources.
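
OpenAI has not published how O3 searches, so the sketch below swaps in a simpler, well-known form of test-time search: best-of-N sampling, where a model proposes N candidate answers and a verifier keeps the best one. The toy generator and verifier are hypothetical stand-ins. The point it illustrates is the hardware one: the number of model calls, and therefore the compute bill, grows linearly with N.

```python
import random
from typing import Callable, Optional, Tuple


def best_of_n(generate: Callable[[random.Random], str],
              score: Callable[[str], float],
              n: int,
              seed: int = 0) -> Tuple[Optional[str], int]:
    """Sample n candidate answers and keep the highest-scoring one.

    Returns (best_candidate, number_of_generate_calls). The call count is this
    sketch's stand-in for test-time compute: it grows linearly with n.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    rng = random.Random(seed)
    best: Optional[str] = None
    best_score = float("-inf")
    calls = 0
    for _ in range(n):
        candidate = generate(rng)
        calls += 1
        s = score(candidate)
        if s > best_score:
            best, best_score = candidate, s
    return best, calls


if __name__ == "__main__":
    # Toy "model": guesses a random number 0-99; toy verifier rewards the answer "42".
    def toy_generate(rng: random.Random) -> str:
        return str(rng.randint(0, 99))

    def toy_score(answer: str) -> float:
        return 1.0 if answer == "42" else 0.0

    for n in (1, 10, 100, 1000):
        answer, cost = best_of_n(toy_generate, toy_score, n)
        print(f"n={n:5d}  solved={answer == '42'}  model calls={cost}")
```

Larger N raises the chance of finding a correct answer, but every extra candidate is another full model call, which is why search-heavy inference translates directly into GPU demand.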

Here are some task performances from the ARC test:

The Importance of Test-Time Computation

In the past, AI’s demand for hardware mainly stemmed from the training of larger, more complex models. While the training process for these models is costly and requires substantial computational resources, once training is completed, the computational demand during inference tends to be relatively stable and predictable.

However, with the O3 breakthrough, test-time computation is entering a new phase. In the future, each complex problem might trigger a massive search over tokens, run on models with billions or even trillions of parameters.

As AI continues to expand into business operations, consumer services, and even national-level data centers, the number of these computational tasks is expected to grow explosively.

More importantly, this growth in inference demand shows no obvious limit. As users demand smarter, more capable AI, the intensity of inference computation will rise too. Nearly every new model release expands inference demand, which means more GPUs will be needed to keep up.
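
The link between per-query compute and the number of GPUs needed can be sketched with simple capacity arithmetic: total GPU-seconds demanded per day, divided by what one GPU can deliver per day. The query volume, per-query cost, and utilization below are hypothetical placeholders, not figures from any provider.

```python
import math


def gpus_needed(queries_per_day: float,
                gpu_seconds_per_query: float,
                utilization: float = 0.5) -> int:
    """Rough fleet size: GPU-seconds demanded per day divided by the
    GPU-seconds a single GPU can deliver per day at the given utilization."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    seconds_per_day = 86_400
    demand = queries_per_day * gpu_seconds_per_query
    supply_per_gpu = seconds_per_day * utilization
    return math.ceil(demand / supply_per_gpu)


if __name__ == "__main__":
    # Hypothetical workload: 1 million hard queries per day at 50% utilization.
    for secs in (60, 120, 240):
        print(f"{secs:>4} GPU-s/query -> {gpus_needed(1_000_000, secs)} GPUs")
```

Under these assumptions the fleet grows from 1,389 to 2,778 to 5,556 GPUs: each doubling of per-query compute roughly doubles the hardware required, even with query volume held flat.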

For Nvidia, this is not only a technological shift but also a powerful driver of its stock price. As demand for test-time computation surges, Nvidia, with its market-leading position in AI infrastructure, stands to benefit greatly. If the trend holds, its stock price should rise with it, and investors can expect Nvidia to be the biggest beneficiary of the global expansion in inference demand in the coming years.

From GPUs to AI Factories: Nvidia’s Hardware Empire

Nvidia’s position in the global supply chain is nearly unmatched. Beyond its cutting-edge technology, it has built broad partnerships with major companies worldwide, making it arguably the company best positioned to meet the vast demand for inference.

Imagine that to cope with such enormous demand, simply manufacturing GPUs is not enough. Nvidia must manage a complete supply chain from start to finish, including advanced memory, ultra-fast networks, dedicated connectors, DPUs, racks, cooling systems, and even the operating systems and software stacks between these components.

Over the years, Nvidia has built a robust ecosystem through close collaboration with top suppliers such as Micron, alongside its own networking platforms (InfiniBand, from its Mellanox acquisition, and Spectrum-X Ethernet). This gives it a hard-to-replicate advantage and keeps it well ahead of competitors like AMD.

In this new era of inference computing, inference is no longer a simple task. Cloud computing companies, AI firms, and even traditional large enterprises are actively seeking Nvidia’s hardware solutions to deploy their efficient AI models. These companies need not just a single GPU, but massive clusters capable of handling complex and ongoing inference tasks. Thanks to Nvidia’s strong supply chain advantage, it can rapidly scale up data center construction and deliver optimal configurations to clients.

Recently, Nvidia also introduced the concept of an “AI Factory,” offering clients a one-stop, pre-packaged AI data center solution.

How to Address the Surge in Future Inference Computation Demand

Nvidia’s future success will depend on how it handles the rapid growth of inference computation demand. While training larger models is beginning to hit technological bottlenecks, the demand for inference computation is still in its early stages, and as more AI models reliant on inference emerge, these computations will become more complex and require significantly more computational resources.

The scalability of inference computation means that AI, in making decisions, will no longer just rely on pre-trained models but will need to continuously compute and infer in real-time as it processes large amounts of data. This process will not only take time but will require massive computational power. As AI applications proliferate, especially in enterprise and consumer services, inference computation demand is expected to grow explosively.

However, Nvidia has the technological infrastructure to handle these challenges. By continually expanding its computing clusters (adding more devices to share the load), accelerating its networks (speeding up data transfer between devices), and optimizing software configurations (making systems run more efficiently), Nvidia is well placed to meet future demand.
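
“Adding more devices to share the load” can be illustrated with a classic scheduling heuristic, longest-processing-time-first (LPT): sort jobs from longest to shortest and always hand the next job to the least-loaded device. This is a generic textbook sketch with a hypothetical job list, not a description of Nvidia’s own schedulers, and it ignores the communication overhead that real clusters must pay.

```python
import heapq
from typing import List


def makespan(job_seconds: List[float], n_devices: int) -> float:
    """Greedy longest-processing-time-first (LPT) scheduling.

    Jobs are sorted from longest to shortest; each goes to the device with the
    least accumulated work (the top of a min-heap). Returns the busiest
    device's finishing time, i.e. how long the whole batch takes.
    """
    if n_devices < 1:
        raise ValueError("need at least one device")
    loads = [0.0] * n_devices  # a list of equal values is already a valid heap
    for job in sorted(job_seconds, reverse=True):
        lightest = heapq.heappop(loads)
        heapq.heappush(loads, lightest + job)
    return max(loads)


if __name__ == "__main__":
    # Hypothetical batch of inference jobs, in GPU-seconds.
    jobs = [8, 7, 6, 5, 4]
    for n in (1, 2, 5):
        print(f"{n} device(s): batch finishes after {makespan(jobs, n)} s")
```

The batch takes 30 s on one device, 17 s on two, and 8 s on five. LPT is a heuristic: the best possible two-device split here is 15 s ({8, 7} and {6, 5, 4}), which is one reason smarter software is part of scaling alongside raw hardware.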

Moreover, Nvidia’s CUDA, the parallel computing platform and programming model that lets developers run general-purpose code on Nvidia GPUs, has become the industry standard, giving Nvidia a clear competitive advantage.

Innovation and the Wallet: Double Impact

Nvidia’s recent financial performance has been remarkable. In Q3 of FY2025, its revenue soared to $35.1 billion, a 94% year-on-year increase. That quarter ended before O3 was announced, so the growth reflects surging demand for AI hardware in general; the shift toward test-time computation could extend it further as Nvidia keeps expanding its market share.

As inference demand grows, inference costs may rise in the short term, but Nvidia is well positioned to absorb this. Its scale and operational strength let it negotiate better supplier prices than its competitors while continuously improving system efficiency. This helps explain Nvidia’s strong results in data centers, which have become the core driver of its revenue growth.

Its data center division contributed about 88% of total revenue in Q3 of FY2025, reaching a record $30.8 billion, a 112% year-on-year increase. This reflects not just hardware volume but Nvidia’s innovations in AI chip design, which have given it firm control over pricing.
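
The growth and share figures quoted above can be cross-checked with a few lines of arithmetic. This sketch only uses the rounded numbers given in the text, so its outputs are approximations rather than Nvidia’s reported year-ago figures.

```python
def prior_year_value(current: float, yoy_growth_pct: float) -> float:
    """Back out the year-ago figure implied by a year-over-year growth rate."""
    return current / (1 + yoy_growth_pct / 100)


def share_pct(part: float, whole: float) -> float:
    """Percentage of `whole` represented by `part`."""
    return 100 * part / whole


if __name__ == "__main__":
    # Q3 FY2025 figures as quoted in the article, in billions of USD.
    total_rev, total_growth = 35.1, 94
    dc_rev, dc_growth = 30.8, 112

    print(f"Implied year-ago revenue: ${prior_year_value(total_rev, total_growth):.1f}B")
    print(f"Implied year-ago data center revenue: ${prior_year_value(dc_rev, dc_growth):.1f}B")
    print(f"Data center share of revenue: {share_pct(dc_rev, total_rev):.1f}%")
```

It prints roughly $18.1B and $14.5B for the year-ago quarter and a data center share of 87.7%, consistent with the “about 88%” stated above.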

Nvidia’s Blackwell B200 chip lowers the cost and energy consumption of AI inference while striking a strong balance between performance and energy efficiency. This gives Nvidia room to charge premium prices with little fear of competition.

With new technologies on the way, such as the Rubin platform that follows Blackwell, Nvidia remains at the forefront of the industry, a step ahead of its competitors. Its pricing is telling: a Blackwell GPU costs roughly $30,000 to $40,000, a significant premium over AMD’s competing Instinct data-center accelerators. This pricing secures substantial revenue while reinforcing Nvidia’s market dominance.

Nvidia Valuation: Buy Opportunity

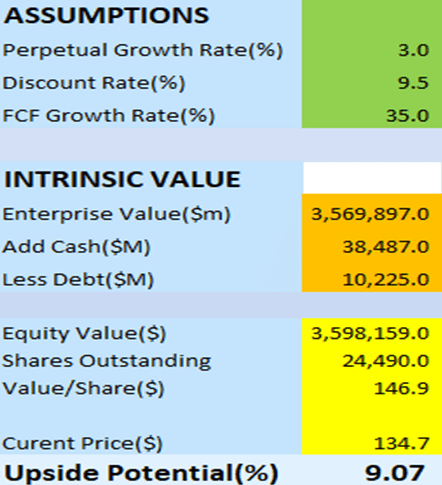

I used a discounted cash flow (DCF) model to value Nvidia, with the following assumptions:

- Perpetual Growth Rate: I set a growth rate of 3%, which is close to the global expected GDP growth rate (around 3.3%).

- Discount Rate: The discount rate is set at 9.5%, based on the company’s WACC, reflecting its capital costs.

- Free Cash Flow (FCF) Growth Rate: Given the company’s 72% annual compound growth rate over the past five years and 41.73% over the past 15 years, I’ve conservatively chosen a 35% growth rate to avoid overly optimistic assumptions.
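
The assumptions above plug into a standard two-stage DCF: free cash flow grows at a high rate for a fixed number of years, then at the perpetual rate forever (the Gordon growth model), with everything discounted at the WACC. The sketch below is a generic version of that structure, not the author’s spreadsheet. The starting FCF, the length of the high-growth stage, and the share count are hypothetical placeholders, and it skips the net cash/debt adjustment a full valuation would include, so it will not reproduce the $146.9 figure.

```python
def dcf_value_per_share(base_fcf: float, growth: float, years: int,
                        discount: float, terminal_growth: float,
                        shares: float) -> float:
    """Two-stage DCF.

    Stage 1: free cash flow grows at `growth` for `years` years.
    Stage 2: it then grows at `terminal_growth` forever (Gordon growth model).
    All cash flows are discounted at `discount`; the total is divided by `shares`.
    """
    if discount <= terminal_growth:
        raise ValueError("discount rate must exceed the perpetual growth rate")
    present_value = 0.0
    fcf = base_fcf
    for t in range(1, years + 1):
        fcf *= 1 + growth
        present_value += fcf / (1 + discount) ** t
    # Terminal value at the end of year `years`, then discounted back to today.
    terminal = fcf * (1 + terminal_growth) / (discount - terminal_growth)
    present_value += terminal / (1 + discount) ** years
    return present_value / shares


if __name__ == "__main__":
    # Rates from the assumptions above; everything else is a hypothetical placeholder.
    value = dcf_value_per_share(
        base_fcf=50e9,         # hypothetical starting annual FCF, USD
        growth=0.35,           # FCF growth rate from the article
        years=5,               # hypothetical length of the high-growth stage
        discount=0.095,        # discount rate (WACC) from the article
        terminal_growth=0.03,  # perpetual growth rate from the article
        shares=24.5e9,         # hypothetical share count
    )
    print(f"Illustrative fair value: ${value:.2f} per share")
```

Because most of the value sits in the terminal term, the result is highly sensitive to the gap between the 9.5% discount rate and the 3% perpetual growth rate, and to how many years the 35% growth is assumed to last.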

Based on these assumptions, my valuation model suggests a fair value of $146.9 per share, implying that the current share price trades at roughly a 9% discount.

At this valuation, Nvidia offers a relatively “cheap” entry point for investors, with significant growth potential. In addition, the company’s trailing-twelve-month (TTM) P/E ratio is 53.19, and its expected EPS for the fiscal year ending January 2026 is $4.43. Applying that multiple to the EPS estimate (53.19 × $4.43 ≈ $236), I set a 2026 target price of $236, a 60.4% upside from my calculated fair value of $146.9.
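
The 2026 target follows from multiplying the TTM P/E by the EPS estimate, and the upside is measured against the DCF fair value rather than the market price. A short sketch reproduces both numbers from the figures in the text.

```python
def target_price(pe_ratio: float, forward_eps: float) -> float:
    """Price implied by applying a P/E multiple to an EPS estimate."""
    return pe_ratio * forward_eps


def upside_pct(target: float, reference: float) -> float:
    """Percentage change from a reference price to a target price."""
    return 100 * (target / reference - 1)


if __name__ == "__main__":
    target = target_price(53.19, 4.43)  # TTM P/E x expected EPS, figures from the text
    print(f"Target price: ${target:.2f}")                              # $235.63
    print(f"Upside vs fair value: {upside_pct(target, 146.9):.1f}%")  # 60.4%
```

The 60.4% figure uses the unrounded $235.63; measured from the rounded $236 it would be about 60.7%. The method also assumes the market keeps valuing Nvidia at its current TTM multiple, so any compression in the P/E lowers the target proportionally.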

Therefore, I believe Nvidia is a buy opportunity right now, and there could be attractive returns in the short term.

In conclusion, with OpenAI’s release of the O3 model, we have entered a new era of inference computation. This presents a significant opportunity for Nvidia. Now, with a market cap exceeding $3.2 trillion, Nvidia’s potential for growth remains vast as AI inference computation demand surges. For investors, Nvidia’s role in this new phase is crucial. With its comprehensive technological strength and powerful strategic partnerships, Nvidia is at the forefront of AI technology, ready to meet the global explosion in inference computation demand.

Nvidia is not only a hardware leader; thanks to its strong supply chain and innovation, it has firmly grasped the drivers of future growth. For those looking to capitalize on this technological revolution, Nvidia is undoubtedly a company to watch.

*This article is provided for general information purposes and does not constitute legal, tax or other professional advice from BiyaPay or its subsidiaries and its affiliates, and it is not intended as a substitute for obtaining advice from a financial advisor or any other professional.

We make no representations or warranties, express or implied, as to the accuracy, completeness or timeliness of the contents of this publication.

is a broker-dealer registered with the U.S. Securities and Exchange Commission (SEC) (No.: 802-127417), member of the Financial Industry Regulatory Authority (FINRA) (CRD: 325027), member of the Securities Investor Protection Corporation (SIPC), and regulated by FINRA and SEC.

registered with the US Financial Crimes Enforcement Network (FinCEN), as a Money Services Business (MSB), registration number: 31000218637349, and regulated by FinCEN.

registered as Financial Service Provider (FSP number: FSP1007221) in New Zealand, and is a member of the Financial Dispute Resolution Scheme, a New Zealand independent dispute resolution service provider.