
From data centers to AI inference, what big opportunities does AMD’s MI300X bring?

AI’s popularity is now plain for all to see, and demand for inference in particular has exploded. Almost every industry is discussing AI, and technologies such as generative AI and large language models are changing how we work and live. Inference (simply put, an AI model computing answers and making judgments from the data it is given) has become the core of all this. More and more large companies, including cloud service providers, are increasing their investment in AI inference, which in turn is driving rapid growth across the whole market.

So where does AMD fit into this market? This article focuses on its MI300X GPU, which is not only a “blockbuster” product for AMD in AI inference but also has the potential to challenge market leader NVIDIA on performance and cost-effectiveness. Let’s analyze how AMD is using the AI inference opportunity to drive revenue growth.

The AI inference market is expanding rapidly! How big is the pie?

What is AI inference? Why is it so important?

Let’s start with what “AI inference” is. Inference is the process in which an AI model, after extensive “training”, applies what it has learned to make judgments and decisions. When you talk to ChatGPT, for example, it is not guessing at random: it draws on what it learned during training to give you a suitable answer.

For many companies, this is critical infrastructure. Many businesses now depend on real-time decision-making, such as automatic speech recognition, image processing, and personalized recommendations, all of which rely on powerful inference capabilities. And as the technology develops, demand in this market keeps growing.

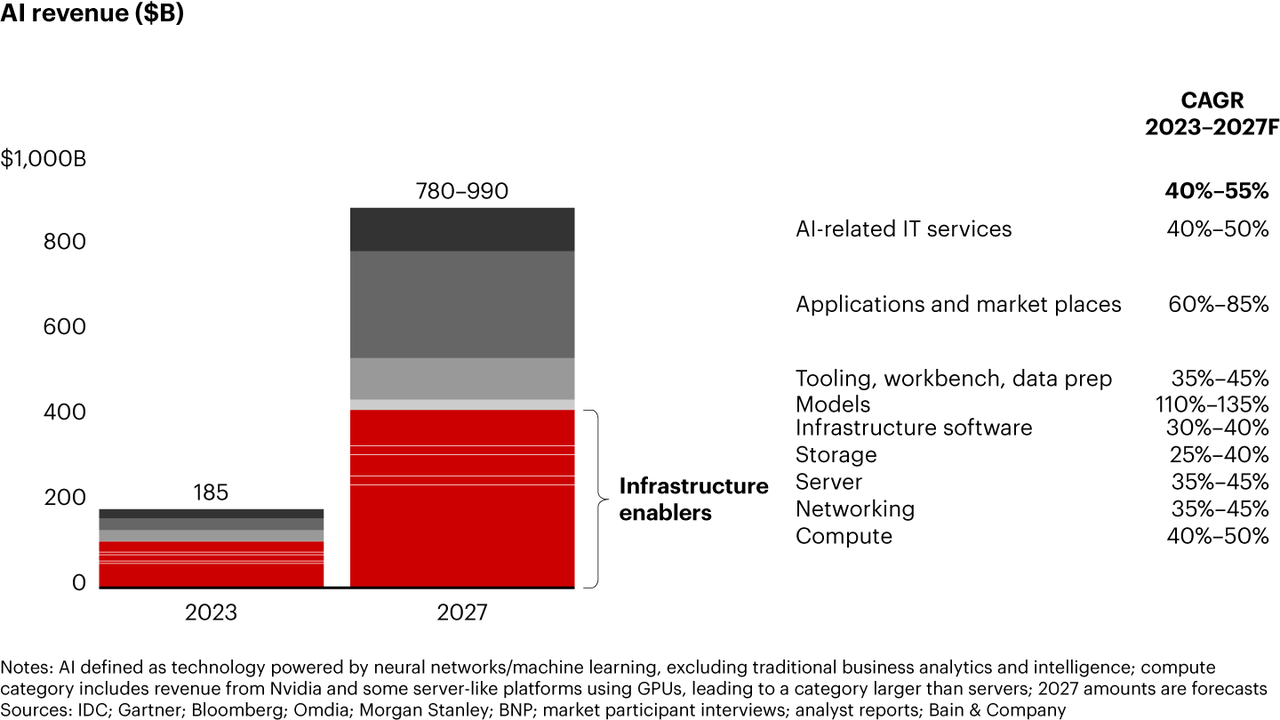

The outlook for the AI inference market is exciting. According to industry forecasts, the market could reach $780 billion to $990 billion by 2027. Yes, you read that right: hundreds of billions of dollars! That growth is faster than many industries expected, so both cloud service companies and traditional enterprises are likely to increase their investment in AI inference to stay competitive.

Why is demand for AI inference so high? Several major factors are driving the market. First, hyperscalers need enormous computing resources: large companies like Microsoft, Meta, and Google run large-scale data processing and inference workloads that require powerful GPUs. Second, the spread of cloud services has led more enterprises to move AI inference to the cloud, which further drives demand for efficient hardware. Finally, more and more software companies are investing in AI inference technology to help customers make smarter decisions and work more efficiently.

How does AMD stand out in the AI inference war?

Next, let’s look at how AMD is seizing this opportunity, especially with its MI300X GPU, a potential game changer in this field. What unique advantages does AMD bring to such a fiercely competitive market?

Firstly, the hardware advantages of AMD’s MI300X GPU are impressive. Let’s take a closer look at its technical features, especially its performance in handling AI inference tasks.

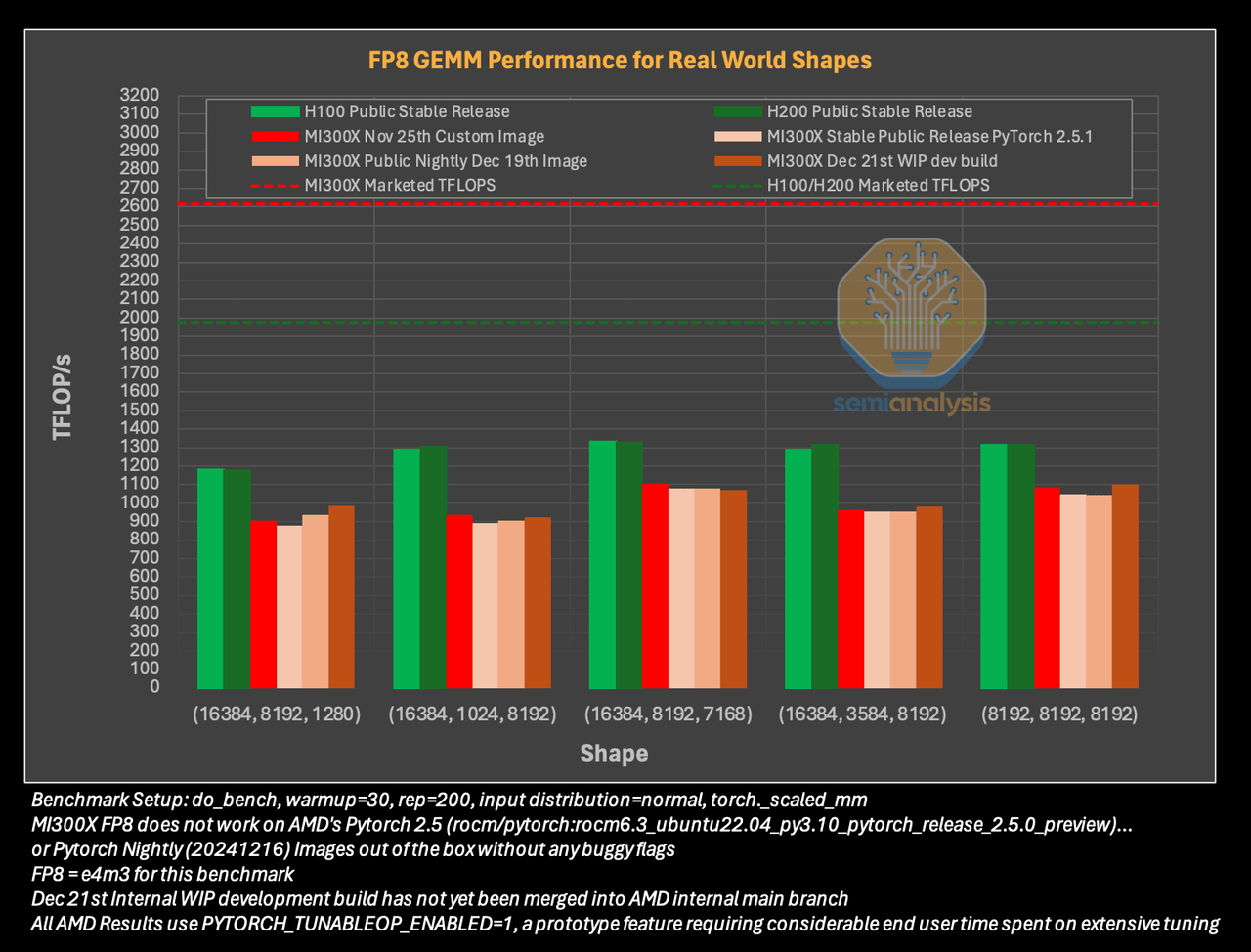

The 192 GB of HBM3 memory and 5.3 TB/s of memory bandwidth are the MI300X’s two most eye-catching features. By comparison, NVIDIA’s H200 GPU has 141 GB of HBM3e memory and 4.8 TB/s of bandwidth. In other words, the MI300X offers about 36% more memory capacity and roughly 10% more bandwidth, which makes it particularly well suited to inference tasks that process large amounts of data, such as generative AI and large language models (LLMs).
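To make the capacity numbers concrete, here is a rough back-of-the-envelope sketch. It assumes a hypothetical 70-billion-parameter model stored in 16-bit precision (2 bytes per parameter), uses decimal gigabytes, and counts only the weights, ignoring the KV cache, activations, and runtime overhead that real deployments also need.

```python
# Back-of-the-envelope check: do an LLM's weights fit on one GPU?
# Assumptions (illustrative only): decimal GB, 2 bytes per parameter (FP16/BF16),
# weights only; KV cache, activations, and runtime overhead are ignored.

GPU_MEMORY_GB = {
    "MI300X": 192.0,  # HBM3 capacity quoted in the article
    "H200": 141.0,    # HBM3e capacity quoted in the article
}


def weight_footprint_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory needed just to hold the model weights, in decimal GB."""
    return num_params * bytes_per_param / 1e9


def headroom_gb(gpu: str, num_params: float, bytes_per_param: int = 2) -> float:
    """Memory left after loading the weights (negative means they do not fit)."""
    return GPU_MEMORY_GB[gpu] - weight_footprint_gb(num_params, bytes_per_param)


if __name__ == "__main__":
    params = 70e9  # hypothetical 70B-parameter model
    print(f"weights: {weight_footprint_gb(params):.0f} GB")
    for gpu in GPU_MEMORY_GB:
        print(f"{gpu}: {headroom_gb(gpu, params):.0f} GB left for KV cache and activations")
```

Under these assumptions the weights take about 140 GB, leaving roughly 52 GB free on an MI300X versus about 1 GB on an H200. That spare memory is what holds the KV cache for long prompts and many concurrent users.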

For AI inference, especially generative AI (such as ChatGPT), memory capacity and bandwidth are crucial: larger models and longer contexts need more memory, and generating each token requires streaming model weights out of memory. As demand for generative AI and industry-specific applications surges, the MI300X offers an efficient option that can handle more complex inference tasks in less time, especially in large-scale deployments.
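A common rule of thumb shows why bandwidth matters: when a model generates text one token at a time with small batches, the GPU must read roughly all of the model’s weights from memory for every token, so memory bandwidth caps decoding speed. The sketch below computes that upper bound under simplifying assumptions (peak rather than achieved bandwidth, no KV-cache reads, no batching, compute never the bottleneck) for a hypothetical 140 GB of weights. It is an illustration, not a benchmark.

```python
# Bandwidth-bound upper limit on single-sequence decoding speed.
# Assumption: each generated token streams all weights from memory once,
# at the vendor's peak bandwidth. Real throughput will be lower.

BANDWIDTH_TB_S = {
    "MI300X": 5.3,  # peak memory bandwidth quoted in the article
    "H200": 4.8,
}


def max_decode_tokens_per_s(gpu: str, weight_gb: float) -> float:
    """Tokens per second if reading the weights were the only cost."""
    bytes_per_s = BANDWIDTH_TB_S[gpu] * 1e12
    weight_bytes = weight_gb * 1e9
    return bytes_per_s / weight_bytes


if __name__ == "__main__":
    weights = 140.0  # hypothetical model size in GB (e.g. 70B params at 2 bytes each)
    for gpu in BANDWIDTH_TB_S:
        print(f"{gpu}: <= {max_decode_tokens_per_s(gpu, weights):.1f} tokens/s per sequence")
```

For this example the bound is about 38 tokens/s on the MI300X versus about 34 on the H200, a gap that simply mirrors the roughly 10% bandwidth difference. Batching many requests together raises total throughput because the same weight reads are shared across sequences.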

In AI hardware, NVIDIA is undoubtedly the current leader, especially in GPUs. However, AMD is not sitting idly by: it is challenging NVIDIA’s dominance with a distinctive design.

First, AMD’s modular (chiplet) design has clear manufacturing advantages over NVIDIA’s monolithic-die architecture. Because the chip is built from smaller modules that are manufactured and tested separately, a defect scraps only one small module rather than an entire large die, which raises yield and lowers production costs. For AI inference workloads that run across large numbers of GPUs in parallel, this cost-effectiveness lets AMD price its products more competitively, which matters greatly to data centers and large-scale computing enterprises.
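The yield argument can be illustrated with the classic Poisson yield model, in which the fraction of defect-free dies is exp(-A × D) for die area A and defect density D. The sketch below compares one large die with the same silicon split into eight chiplets that are tested before assembly. The 8 cm² total area and 0.1 defects/cm² are hypothetical round numbers, not MI300X or H200 figures, and packaging and interconnect costs are ignored.

```python
import math


def poisson_yield(die_area_cm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects under the simple Poisson yield model."""
    return math.exp(-die_area_cm2 * defects_per_cm2)


def silicon_per_good_unit(total_area_cm2: float, defects_per_cm2: float,
                          num_chiplets: int = 1) -> float:
    """Wafer area consumed per working product.

    Assumes each chiplet is tested on its own (known-good die), so a defect
    discards only the chiplet it lands on rather than the whole product.
    """
    chiplet_area = total_area_cm2 / num_chiplets
    return total_area_cm2 / poisson_yield(chiplet_area, defects_per_cm2)


if __name__ == "__main__":
    TOTAL_AREA = 8.0      # hypothetical total compute silicon, cm^2
    DEFECT_DENSITY = 0.1  # hypothetical defects per cm^2
    for n in (1, 8):
        y = poisson_yield(TOTAL_AREA / n, DEFECT_DENSITY)
        cost = silicon_per_good_unit(TOTAL_AREA, DEFECT_DENSITY, n)
        print(f"{n} die(s): yield per die {y:.1%}, silicon per good unit {cost:.1f} cm^2")
```

With these made-up numbers, a single 8 cm² die yields about 44.9% and consumes roughly 17.8 cm² of wafer per working chip, while eight 1 cm² chiplets each yield about 90.5% and need roughly 8.8 cm². Real products add packaging and die-to-die interconnect costs that eat into this advantage.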

More importantly, AMD’s ROCm software platform gives it a strong advantage in competing with NVIDIA. Unlike NVIDIA’s proprietary CUDA ecosystem, ROCm is open source, giving developers more freedom to tune and optimize their AI applications. For many enterprises, especially hyperscalers, this flexibility and customizability are ROCm’s core strengths. Companies like Microsoft and Meta have begun deploying AMD’s ROCm platform alongside NVIDIA’s CUDA stack, reducing their reliance on it and opening up more market opportunities for AMD.

ROCm’s openness appeals to companies that want to avoid relying on a single supplier, which gives AMD great potential to expand in the AI inference market.

With the MI300X GPU and the ROCm platform, AMD has shown strong technological advantages in the AI inference market. It delivers higher hardware performance and gains a cost advantage through its modular design, while the open-source ROCm platform gives enterprises more flexibility and reduces their dependence on a single technology supplier, helping AMD win over more customers.

Data centers: how AMD is using AI inference to drive growth

As AI technology spreads, data centers play an increasingly important role in processing massive amounts of data and running AI inference. Inference requires substantial computing power, and AMD’s MI300X GPU is designed to meet that demand. The MI300X performs well on inference workloads and has earned an important place in data center architectures, especially at large-scale computing companies such as Microsoft, Meta, and Google, which have begun adopting AMD hardware widely.

As demand for AI inference computing surges, data centers depend more and more on high-performance hardware. The MI300X meets this need with strong performance and a modular design. Beyond raw inference capability, it offers the scalability and flexibility to fit into data center workloads that also involve storage, analytics, and networking, so it can perform well across different scenarios and improve overall data center efficiency.

For AMD, the data center is not only a sales channel but also a key area for future revenue growth. With demand for AI inference rising, the data center market has become an important growth engine for AMD. Through close partnerships with global giants such as Microsoft and Meta, AMD’s influence in data centers keeps growing, and long-term service and technical support help consolidate its market position.

In short, data centers have become the core of AI inference development, and the MI300X GPU is a key driver in this process. As global demand for AI inference keeps rising, AMD is well positioned to achieve greater success in the data center market with its leading hardware, which could drive rapid revenue growth in the coming years.

Overall, AMD has great growth potential. If you are optimistic about AMD and want to seize investment opportunities, BiyaPay’s multi-asset wallet can make that more convenient. BiyaPay provides efficient and secure deposit and withdrawal services and supports trading in US and Hong Kong stocks as well as digital currencies.

With it, you can quickly top up digital currency, exchange it for US dollars or Hong Kong dollars, and then withdraw the funds to your personal bank account for investing. With advantages such as fast arrival of funds and no cap on transfer amounts, it can help you seize market opportunities at critical moments while meeting your fund-safety and liquidity needs.

What challenges and opportunities will we face in the future?

Although AMD has performed well in AI inference and data centers, it also faces many challenges. The most obvious is competition from NVIDIA, which dominates the AI inference market thanks to its deep technical expertise and strong CUDA ecosystem. To break through this barrier, AMD needs to compete with NVIDIA not only on hardware but also on software support that attracts developers.

Here, AMD’s ROCm platform is an advantage. ROCm is open source, supports mainstream deep learning frameworks such as PyTorch, and helps developers migrate to AMD hardware with relatively little effort. Through this platform, AMD can win a place at large-scale computing companies and meet their need for more efficient and flexible AI applications.
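One concrete reason migration can be relatively smooth: PyTorch’s ROCm builds expose AMD GPUs through the same `torch.cuda` interfaces used on NVIDIA hardware (via AMD’s HIP layer), so device-selection code like the sketch below typically runs unchanged on either vendor. The helper names `detect_backend` and `pick_device` are illustrative, and the sketch treats PyTorch as optional so it also runs on machines where it is not installed.

```python
def detect_backend() -> str:
    """Report which GPU backend the installed PyTorch build targets, if any."""
    try:
        import torch  # optional: the sketch still runs without PyTorch
    except ImportError:
        return "torch not installed"
    # ROCm builds of PyTorch set torch.version.hip to a version string;
    # CUDA builds leave it as None (and vice versa for torch.version.cuda).
    if getattr(torch.version, "hip", None):
        return "rocm"
    if getattr(torch.version, "cuda", None):
        return "cuda"
    return "cpu"


def pick_device() -> str:
    """Choose a device string that works on both NVIDIA (CUDA) and AMD (ROCm) builds.

    ROCm builds reuse the torch.cuda namespace, so "cuda" also refers to an AMD GPU there.
    """
    try:
        import torch
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    print("PyTorch backend:", detect_backend())
    print("device to use:", pick_device())
```

The design point is that application code keeps writing `"cuda"` and calling `torch.cuda` functions; which vendor’s GPU actually runs the work is decided by which PyTorch build is installed.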

Technology integration is another challenge. As AI applications diversify, hardware needs not only strong inference performance but also the ability to work alongside storage and networking. The MI300X’s modular design gives it greater flexibility and adaptability to meet these complex requirements.

At the same time, as AI adoption explodes worldwide, demand is growing rapidly. AMD has the opportunity to win more market share through technological innovation and close cooperation with enterprises. Partnerships with large companies such as Microsoft and Meta bring AMD hardware sales, technical support, and long-term service revenue, and also strengthen its brand.

Overall, although facing challenges, AMD still has a huge opportunity to seize the rapid growth of the AI inference market in the coming years with its technological innovation and partnerships.

What is the investment outlook?

AMD has huge growth opportunities in the AI inference and data center markets. The launch of the MI300X GPU has given AMD an important position in hyperscale computing and AI hardware. With demand for AI inference exploding, AMD stands to benefit, especially in the cloud service and AI accelerator markets, which should drive its revenue growth.

With the AI inference market expected to reach $780 billion to $990 billion by 2027, AMD has an unprecedented opportunity. As data center demand keeps increasing, AMD’s hardware share in this market is expected to continue expanding.

From a financial perspective, AMD’s profitability is expected to rise significantly in 2025 and 2026, with its share price possibly reaching $150 and $240, respectively. Although AMD still trails NVIDIA in market share, its future growth potential is very promising.

Overall, AMD is in a growth phase full of opportunities. If it can continue to innovate and expand its market share, AMD is expected to bring considerable returns to investors.

*This article is provided for general information purposes and does not constitute legal, tax or other professional advice from BiyaPay or its subsidiaries and its affiliates, and it is not intended as a substitute for obtaining advice from a financial advisor or any other professional.

We make no representations or warranties, express or implied, as to the accuracy, completeness, or timeliness of the contents of this publication.

